Authors:

(1) Simone Silvestri, Massachusetts Institute of Technology, Cambridge, MA, USA;

(2) Gregory Wagner, Massachusetts Institute of Technology, Cambridge, MA, USA;

(3) Christopher Hill, Massachusetts Institute of Technology, Cambridge, MA, USA;

(4) Matin Raayai Ardakani, Northeastern University, Boston, MA, USA;

(5) Johannes Blaschke, Lawrence Berkeley National Laboratory, Berkeley, CA, USA;

(6) Valentin Churavy, Massachusetts Institute of Technology, Cambridge, MA, USA;

(7) Jean-Michel Campin, Massachusetts Institute of Technology, Cambridge, MA, USA;

(8) Navid Constantinou, Australian National University, Canberra, ACT, Australia;

(9) Alan Edelman, Massachusetts Institute of Technology, Cambridge, MA, USA;

(10) John Marshall, Massachusetts Institute of Technology, Cambridge, MA, USA;

(11) Ali Ramadhan, Massachusetts Institute of Technology, Cambridge, MA, USA;

(12) Andre Souza, Massachusetts Institute of Technology, Cambridge, MA, USA;

(13) Raffaele Ferrari, Massachusetts Institute of Technology, Cambridge, MA, USA.

Table of Links

5.1 Starting from scratch with Julia

5.2 New numerical methods for finite volume fluid dynamics on the sphere

5.3 Optimization of ocean free surface dynamics for unprecedented GPU scalability

6 How performance was measured

7 Performance Results and 7.1 Scaling Results

9 Acknowledgments and References

Abstract

Climate models must simulate hundreds of future scenarios for hundreds of years at coarse resolutions, and run a handful of high-resolution decadal simulations to resolve localized extreme events. Using Oceananigans.jl, written from scratch in Julia, we report several achievements. First, a global ocean simulation with breakthrough horizontal resolution (488 m) reaching 15 simulated days per day (0.041 simulated years per day; SYPD). Second, Oceananigans simulates the global ocean at 488 m with breakthrough memory efficiency on just 768 Nvidia A100 GPUs, a fraction of the resources available on current and upcoming exascale supercomputers. Third, and arguably most significant for climate modeling, Oceananigans achieves breakthrough energy efficiency, reaching 0.95 SYPD at 1.7 km on 576 A100s and 9.9 SYPD at 10 km on 68 A100s, the latter representing the highest horizontal resolutions employed by current IPCC-class ocean models. Routine climate simulations with 10 km ocean components are within reach.

1 Justification

Oceananigans.jl, a new ocean model written from scratch in Julia, achieves ocean simulations with breakthrough resolution, memory efficiency, and energy efficiency, realizing 0.041 simulated years per day (SYPD) at 488 m on 768 Nvidia A100s, 0.95 SYPD at 1.7 km on 576 A100s, and 9.9 SYPD at 10 km on 68 A100s.

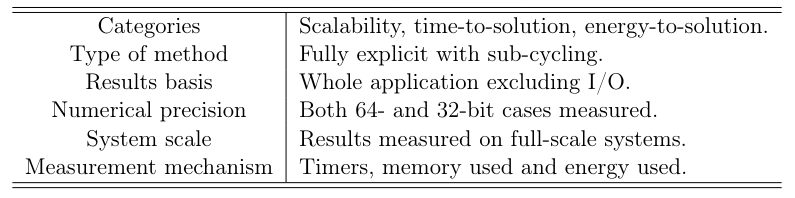

2 Performance Attributes

3 Overview of the Problem

Climate models are essential for predicting where, when, and how climate change threatens Earth’s ecosystems and human civilization. But current climate models, which capture only the broadest aspects of global warming, fall far short of providing the accuracy and granularity required to design and implement costly adaptation and mitigation strategies [14]. Significant reduction of the uncertainty of climate predictions is potentially worth trillions of dollars [20].

Climate models simulate the three-dimensional fluid dynamics, thermodynamics, chemistry, and biology of the atmosphere, ocean, and land to predict the hydrological cycle, carbon cycle and the net energy imbalance of the Earth system. While typical climate models use coarse resolutions of 25-100 km to simulate the numerous climate scenarios required by the Intergovernmental Panel on Climate Change (IPCC) [25], a handful of state-of-the-art climate simulations have been performed at higher resolutions of O(10 km) at astronomical expense. At either resolution there are many processes, such as clouds and ocean turbulence, that cannot be explicitly simulated and are instead approximated by empirical formulae called parameterizations. Biases due to inadequate parameterizations dominate the uncertainty of climate predictions over the next few decades [34, 23].

The prevailing strategy to reduce climate model uncertainty is to refine model resolution as much as possible [34]. For example, at horizontal resolutions of 1 km a substantial fraction of atmospheric convection and ocean turbulence are explicitly modeled by Newton’s laws of motion, greatly reducing the impact of parameterizations [34]. High-resolution climate modeling is further required to make predictions for specific regions, providing information for local decision makers on adaptation and mitigation [14].

Yet the “resolution strategy” is fundamentally limited: even at 1 km resolution many climate-relevant physical processes remain unresolved [49]. Worse, processes such as sea ice dynamics, biology, or cloud-aerosol interaction will never be resolved because accurate macroscopic laws do not exist. Absent theoretical breakthroughs, such “irreducible” uncertainties can be addressed only by leveraging Earth system observations through advances in data assimilation and machine learning [41]. Data-driven optimization of climate models requires ensembles of climate predictions, rather than single predictions at the highest affordable resolution. Ensembles of simulations are also required to explore emission scenarios and to estimate the impact of initial condition uncertainty and internal variability.

Consequently, reducing the uncertainty of climate predictions demands not just higher resolution, but more efficient resource utilization to enable hundreds to thousands of relatively high-resolution simulations. As an example, we consider the computational requirements to enable 100-simulation ensembles using all 37,888 AMD MI250 GPUs of the Frontier exascale supercomputer: completing an ensemble of 300-year simulations (200 years of spin-up + 100 years of prediction) within one month (about 30 days) of wall-clock time requires a climate model that can achieve 10 simulated years per day (SYPD) using 378 GPUs, or roughly 1/100th of Frontier’s resources. Disruptive progress in climate modeling requires not just scalable performance for a single, high-resolution simulation, but advances in efficiency to meet this ensemble-based “10 per 100th” benchmark [40].
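The arithmetic behind the “10 per 100th” benchmark can be checked with a minimal sketch. The function names below are hypothetical and exist only for illustration. The sketch assumes a 30-day month, a 365-day year, and that all ensemble members run concurrently on equal shares of the machine.

```python
# Minimal sketch of the "10 per 100th" arithmetic.
# All function names are hypothetical; the 30-day month and 365-day year
# are simplifying assumptions, not values stated in the paper.

def required_sypd(simulated_years: float, wall_clock_days: float) -> float:
    """Simulated years per day needed to finish within the wall-clock budget."""
    return simulated_years / wall_clock_days


def gpus_per_member(total_gpus: int, ensemble_size: int) -> int:
    """Whole GPUs available to each member when all members run concurrently."""
    return total_gpus // ensemble_size


def sypd_from_days_per_day(simulated_days_per_day: float,
                           days_per_year: float = 365.0) -> float:
    """Convert simulated days per wall-clock day into SYPD."""
    return simulated_days_per_day / days_per_year


if __name__ == "__main__":
    # 200 years of spin-up plus 100 years of prediction, in one 30-day month.
    print(required_sypd(200 + 100, 30))               # 10.0
    # Frontier's 37,888 GPUs shared by a 100-member ensemble.
    print(gpus_per_member(37_888, 100))               # 378
    # The 488 m simulation: 15 simulated days per wall-clock day.
    print(round(sypd_from_days_per_day(15), 3))       # 0.041
```

By the same measure, the reported 9.9 SYPD at 10 km on 68 A100s sits just under the 10 SYPD target, although A100 and MI250 GPUs are not directly interchangeable, so the GPU counts are only loosely comparable.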

Our submission uses the ocean component of a new climate model being developed by the Climate Modeling Alliance [44]. The ocean contributes key uncertainty to climate predictions due to its prominent role in the Earth system’s heat and carbon cycles. At 10 km resolution, where ocean model uncertainties are significantly reduced, the ocean is often the most expensive climate model component [19]. This calls for a step change in ocean model performance.

This paper is available on arXiv under the CC BY 4.0 DEED license.